Degrading the Network at the DARPA Robotics Finals

View from the Network Operations Center During the DARPA Robotics Finals

Much has been written about the robots, the competition, and the tasks at the DARPA Robotics Finals. This article examines two less-covered elements: the Network Operations Center (NOC) and the degraded communications. IWL spent the 20 months before the event as part of the network infrastructure team preparing for the Finals. Here is our perspective.

Up until the DARPA DRC Finals (June 5 & 6, 2015), robots performed specific tasks and were teleoperated. However, for the Finals, the robots were challenged to act as autonomously as possible. The robots had to perform their tasks (navigating through a debris field, drilling a hole, etc.) with minimal human assistance, just as they would in a real-world disaster.

Most robots are merely pre-programmed to do a task, or they are “teleoperated,” meaning remotely controlled by a human operator. That’s fine for many applications, but disaster scenarios present special challenges. During Hurricane Sandy and the Fukushima nuclear accident, phones were down and Internet connectivity was non-existent. Often, a command center could not reach the first responders.

In This Article:

DARPA Selects Maxwell Pro from IWL

How the Degraded Communications Worked

Team Strategies to Deal with the Degraded Communications

Effects of the Degraded Communication

NOC team intensely monitored all tracks, robots, network elements

**Team THOR using Maxwell Pro in their garage**

DARPA Selects Maxwell Pro from IWL

To ensure each robot would act as autonomously as possible, without a “man behind the curtain” calling the shots, DARPA chose IWL to dynamically simulate network blackouts during the competition.

The objective: create network conditions like those that might occur during a disaster, namely skinny network pipes punctuated by intermittent fat ones.

DARPA wanted to nudge the competing teams to limit control input from the operator and enable the robot to identify and perform tasks.

The method: Permit only intermittent delivery of video and 3D imaging from the robot to the operator, so that the real eyes on the task were the robot’s, and not the human operator’s.

IWL provided Maxwell Pro Network Emulators to simulate degraded communication with limited network bandwidth and dropped packets. The Maxwell Pros impaired the communications between the robots and their operators, limiting teleoperation.

Working side by side with DARPA, SPAWAR, and the robotics teams, we’ve created a first class robotics testing environment that can now be replicated in other environments. I believe we have taken robotics to a whole new level.

– Karl Auerbach, Chief Technical Officer at IWL

**Tim Krout, NOC Leader**

**View of the tracks from the grandstands**

How the Degraded Communications Worked

There were four tracks (courses) operating simultaneously, so four robots could run at the same time. The networking for each track was independent of and isolated from the other tracks, and each track had its own Maxwell Pro.

Each robot had eight tasks to perform. Some of the tasks were outside “the building” (see photo) and the rest were inside it. The rationale: in a disaster, a robot outside a building encounters no walls that could block network communications, but once it goes through a door and enters a building, network degradation is far more likely. To emulate this, once the robot was inside, the high-bandwidth data from the robot to the operator was significantly impeded. Before entering and after exiting the building, that data was rate limited but not otherwise significantly impeded.

All data flowed over the same communications channels. Traffic was partitioned into logical “links” as described below.

Link 1

Each robot communicated wirelessly to a wireless access point. The wireless access point was connected to the wired network.

The Operator Control Station (OCS) communicated with its robot over logical Links 2 and 3 by way of a Degraded Communications Emulator (DCE), IWL’s Maxwell Pro Network Emulator.

Link 2

Link 2 was a unidirectional link from the robots through the Degraded Communications Emulator (Maxwell Pro) to the operators. The robots sent telemetry, such as video images and 3D sensing data, over this link.

Link 2 operated in two modes:

Link 2 “Outside” Mode

While the robot attempted the “outside the building” tasks, Link 2 was “rate limited” to 300 Mbit/s:

Task 1 Drive

Task 2 Egress the vehicle

Task 3 Open and pass through the door

Task 8 Climb the stairs

In this mode, Link 2 carried up to about 300 Mbit/s with minimal latency to the OCS.
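The article does not say how the Maxwell Pro enforced the 300 Mbit/s limit. A token bucket is one common way to rate limit a link, so the sketch below uses one purely as an illustration. The class name `TokenBucket` and the 1 Mbit bucket depth are assumptions, not Maxwell Pro settings.

```python
class TokenBucket:
    """Minimal token-bucket rate limiter (hypothetical sketch, not Maxwell Pro's design).

    rate_bps:   sustained rate in bits per second (300 Mbit/s for Link 2 "outside" mode).
    burst_bits: bucket depth, i.e. the largest burst that can pass at once (assumed value).
    """

    def __init__(self, rate_bps: float, burst_bits: float) -> None:
        self.rate_bps = rate_bps
        self.burst_bits = burst_bits
        self.tokens = burst_bits  # start full so an idle link can send one burst at once
        self.last_time = 0.0

    def allow(self, packet_bytes: int, now: float) -> bool:
        """Return True if a packet of packet_bytes may pass at time `now` (seconds)."""
        # Refill for the time elapsed since the last decision, capped at the bucket depth.
        elapsed = max(0.0, now - self.last_time)
        self.tokens = min(self.burst_bits, self.tokens + elapsed * self.rate_bps)
        self.last_time = now
        bits = packet_bytes * 8
        if bits <= self.tokens:
            self.tokens -= bits
            return True
        return False  # over the limit: an emulator would queue or drop this packet


# Link 2 "outside" mode: 300 Mbit/s with an assumed 1 Mbit bucket depth.
link2 = TokenBucket(rate_bps=300e6, burst_bits=1e6)
sent = sum(link2.allow(1500, now=0.0) for _ in range(100))
print(sent)  # 83
```

With the assumed 1 Mbit bucket, 1500-byte packets arriving at the same instant get 83 packets through before the limiter starts refusing; after that, packets pass only as fast as tokens refill at 300 Mbit/s.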

**View of the inside tasks**

**View of the final two tasks**

Link 2 “Inside” Mode

Once inside the building, Link 2 was still rate limited to 300 Mbit/s, but this was interspersed with periods in which 100% of the packets were dropped (called “blackout periods”). Blackout lengths varied, up to about 30 seconds, and the blackout schedule was not known to the teams in advance. These bursts and blackouts occurred while the robot was inside, whether or not it was actively performing the following “inside” tasks:

Task 4 Rotate the Valve

Task 5 Cut through the wall

Task 6 Surprise task, which was different each round (pull down a lever; unplug a plug and plug it into a different socket)

Task 7 Move through the rubble (debris field)

At the start of a blackout, the 100% drop was applied to incoming packets, and any packets already received and queued (up to 256 full-size packets could be buffered) were purged.
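The purge-on-blackout behavior can be modeled with a bounded FIFO. In this hypothetical sketch, `BlackoutQueue` holds up to 256 packets, tail-drops when full (an assumption; the article only gives the buffer size), discards everything queued when a blackout starts, and drops every arrival until the blackout ends.

```python
from collections import deque


class BlackoutQueue:
    """Hypothetical model of an emulator's Link 2 packet queue.

    Up to MAX_PACKETS packets are buffered. Starting a blackout purges the queue,
    and every packet that arrives during the blackout is dropped.
    """

    MAX_PACKETS = 256  # buffer size from the article

    def __init__(self) -> None:
        self.queue: deque = deque()
        self.blackout = False
        self.dropped = 0

    def enqueue(self, packet: bytes) -> bool:
        # 100% drop during a blackout; tail drop when the buffer is full (assumed policy).
        if self.blackout or len(self.queue) >= self.MAX_PACKETS:
            self.dropped += 1
            return False
        self.queue.append(packet)
        return True

    def start_blackout(self) -> int:
        """Enter a blackout: purge everything already queued and return the count purged."""
        purged = len(self.queue)
        self.dropped += purged
        self.queue.clear()
        self.blackout = True
        return purged

    def end_blackout(self) -> None:
        self.blackout = False


q = BlackoutQueue()
for i in range(300):
    q.enqueue(bytes([i % 256]))
print(len(q.queue), q.start_blackout())  # 256 256
```

The practical consequence is that anything the robot handed to the network just before a blackout was lost rather than delayed; a team that still needed that data had to send it again after the link reopened.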

At the beginning of a run, blackouts lasted at most 30 seconds. The average blackout length decreased over the course of the run, and after 45 minutes, blackouts no longer occurred.

The windows in which traffic could flow at 300 Mbit/s were always one second long.
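The real blackout schedule was kept secret, so the generator below only reproduces the published constraints: one-second open windows, blackouts of at most 30 seconds whose average length shrinks during the run, and no blackouts after 45 minutes. The uniform distribution and the linearly shrinking cap are assumptions chosen for illustration. The constant `BYTES_PER_BURST` shows the arithmetic for a single window: 300 Mbit/s for one second is 300 × 10^6 / 8 = 37.5 MB, before any wireless losses.

```python
import random
from typing import List, Optional, Tuple

BURST_S = 1.0               # open windows were always one second long (from the article)
MAX_BLACKOUT_S = 30.0       # no blackout exceeded 30 seconds (from the article)
BLACKOUTS_END_S = 45 * 60   # blackouts stopped 45 minutes into the run (from the article)
LINK2_BPS = 300e6           # Link 2 rate during an open window
BYTES_PER_BURST = LINK2_BPS * BURST_S / 8  # 37.5 MB per one-second window


def blackout_schedule(run_s: float, seed: Optional[int] = None) -> List[Tuple[float, float, str]]:
    """Return contiguous (start, end, state) intervals for Link 2 in "inside" mode.

    Hypothetical: each blackout length is drawn uniformly up to a cap that shrinks
    linearly from MAX_BLACKOUT_S to zero at BLACKOUTS_END_S, so the average blackout
    gets shorter as the run goes on. The distribution used at the Finals was not published.
    """
    rng = random.Random(seed)
    t = 0.0
    intervals: List[Tuple[float, float, str]] = []
    while t < run_s:
        if t >= BLACKOUTS_END_S:
            # After 45 minutes the link simply stays open for the rest of the run.
            intervals.append((t, run_s, "open"))
            break
        cap = MAX_BLACKOUT_S * (1 - t / BLACKOUTS_END_S)
        end = min(t + rng.uniform(0, cap), run_s)
        intervals.append((t, end, "blackout"))
        t = end
        if t >= run_s:
            break
        end = min(t + BURST_S, run_s)
        intervals.append((t, end, "open"))  # one-second open window at full rate
        t = end
    return intervals


schedule = blackout_schedule(60 * 60, seed=1)
print(BYTES_PER_BURST)  # 37500000.0
```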

Note that Link 2 ran over a 300 Mbit/s wireless link and was therefore subject to ordinary wireless packet loss, unrelated to the degradation imposed by the Maxwell Pro. Other radiation sources, such as the sun and aircraft flying overhead, could also affect the wireless link’s performance.

Link 3

Link 3 was an always-on, bidirectional link between the OCS and the robot that let the operators monitor and control the robot. Link 3 was rate limited based on port numbers: it carried TCP and UDP traffic at up to 9600 bits/s. It also carried bidirectional Internet Control Message Protocol (ICMP) traffic, limited to 1024 bits/s. Because each echo request and its reply had to pass through that 1024 bits/s limit, the typical “ping” round-trip time between an operator and its robot was about 1.25 seconds.
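The 1.25-second ping time follows from serialization delay alone: a packet of b bytes needs 8b / R seconds to pass a limit of R bits/s, and a ping pays that cost twice (request and reply). The article does not state the packet size. Assuming 80 bytes per echo packet gives 2 × 640 / 1024 = 1.25 s, while a default Linux ping (84-byte IP packets) would give about 1.31 s. The limits table below is a hypothetical stand-in keyed by protocol, whereas the text says the real classification used port numbers.

```python
# Hypothetical Link 3 limits from the figures in the text, keyed by protocol for
# simplicity (the real emulator classified traffic by port number).
LINK3_LIMITS_BPS = {"tcp": 9600, "udp": 9600, "icmp": 1024}


def serialization_delay_s(packet_bytes: int, rate_bps: float) -> float:
    """Seconds needed to push one packet through a rate limit of rate_bps."""
    return packet_bytes * 8 / rate_bps


def ping_rtt_s(packet_bytes: int, icmp_bps: float = LINK3_LIMITS_BPS["icmp"]) -> float:
    """Round trip for an echo request plus an equal-size reply, counting only
    serialization delay (propagation and processing time are ignored)."""
    return 2 * serialization_delay_s(packet_bytes, icmp_bps)


print(ping_rtt_s(80))                                        # 1.25   (assumed 80-byte packets)
print(ping_rtt_s(84))                                        # 1.3125 (default Linux ping size)
print(serialization_delay_s(1200, LINK3_LIMITS_BPS["udp"]))  # 1.0    (one 1200-byte datagram)
```

The last figure shows why a command channel this narrow had to be used sparingly: a single 1200-byte UDP datagram occupies Link 3 for a full second.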

The operators could send commands to the robot via Link 3.

5.4 GHz Wi-Fi Traffic Prohibited

All 5.4 GHz Wi-Fi traffic was prohibited except for the robot control network. The 5.4 GHz band was used for wireless connectivity from the top of the grandstands out to the robot tracks. To catch any radio frequency (RF) interference that could cause unexpected degradation, the network was actively monitored.

Team Strategies to Deal with the Degraded Communications

In “inside” mode, the unidirectional Link 2 from the robot to the operator let data through only in bursts, and teams took different approaches to this.

Some teams made sure they could squeeze an entire visual/3D data sample into about one-third of a second and sent three samples per second, so that whenever a one-second burst window opened, at least one full sample would get through.

Other teams had the robot send an ongoing stream of small probe packets to detect when the burst window opened; the operator then used the low-speed channel (Link 3) to tell the robot to begin sending data. This strategy required keeping the low-speed channel very clear so that the “please send it now” message would reach the robot quickly.

The teams definitely tailored their networking code to the blackout patterns specific to this event. However, they could not predict in advance when the burst windows would occur; that schedule was kept secret from the teams.
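The two strategies can be compared with a small timing model. The sketch is hypothetical: it assumes samples are sent back to back starting at whole multiples of their length, ignores packet loss and propagation delay, and treats the probe interval, command size, and Link 3 backlog as free parameters. `complete_samples` counts how many one-third-second samples fit entirely inside a one-second window that opens at an arbitrary moment; `usable_burst_after_trigger` estimates how much of the window is left once the robot receives the “please send it now” command.

```python
import math
from fractions import Fraction


def complete_samples(window_start: Fraction, samples_per_s: int = 3,
                     burst_s: Fraction = Fraction(1)) -> int:
    """Strategy 1: samples of length 1/samples_per_s are sent back to back, sample k
    occupying [k/samples_per_s, (k+1)/samples_per_s). Count the samples that lie
    entirely inside the burst window [window_start, window_start + burst_s]."""
    sample_s = Fraction(1, samples_per_s)
    first = math.ceil(window_start / sample_s)             # first sample starting in the window
    end = math.floor((window_start + burst_s) / sample_s)  # samples must finish by the window end
    return max(0, end - first)


def usable_burst_after_trigger(probe_interval_s: float, command_bits: int,
                               link3_backlog_bits: int = 0, link3_bps: float = 9600,
                               burst_s: float = 1.0) -> float:
    """Strategy 2: worst-case seconds of the burst window left once the robot hears
    "send now". The operator may spot the window up to one probe interval late, and
    the command waits behind any traffic already queued on Link 3."""
    command_delay = (link3_backlog_bits + command_bits) / link3_bps
    return max(0.0, burst_s - probe_interval_s - command_delay)


# Strategy 1: try window openings every 1/60 s across one second.
worst = min(complete_samples(Fraction(k, 60)) for k in range(60))
print(complete_samples(Fraction(0)), worst)  # 3 2

# Strategy 2: an assumed 100-byte (800-bit) command with a 0.1 s probe interval.
print(usable_burst_after_trigger(0.1, 800))                           # about 0.82 s left
print(usable_burst_after_trigger(0.1, 800, link3_backlog_bits=9600))  # 0.0: window missed
```

Under these idealized assumptions the first strategy always delivers at least two complete samples per window, which leaves margin for the “at least one” the teams were counting on. The second strategy’s budget collapses once about a second of traffic is queued on Link 3 ahead of the command, which is why that channel had to stay clear.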

Effects of the Degraded Communication

The operators were located in the Team Garage, about a quarter mile from the robot test track. The low bandwidth of Link 3 (only 9600 bits/s) and the limited visual cues arriving on Link 2 discouraged them from teleoperating the robots. An operator could see a high-resolution image or 3D scan only intermittently on Link 2, and then had to decide what command to give the robot on Link 3, for example “move forward two inches.” The more an operator depended on this cycle of receiving visual information on Link 2 and issuing commands on Link 3, the more the robot’s performance suffered.

From our view in the NOC, the more successful robots tended to use the least amount of data communication. This suggests that these robots actually had more autonomy than their competitors.

NOC Team Intensely Monitored all Tracks, Robots, Network Elements

The DARPA Robotics Finals had 25 teams competing for major prizes; it was imperative that the network and the degraded communications perform as flawlessly as possible.

The NOC staff extensively monitored the network and degraded communications operations from multiple viewpoints.

Graphs were produced second by second for Links 2 and 3 in both directions, for all interfaces, and for all teams. About 80 data points per second per track were collected, graphed, and monitored. Collection was fully automated, with NOC staff continuously watching for anything unusual.
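The NOC’s tooling is not described here, but per-second graphs like these are commonly derived from cumulative interface byte counters, such as the SNMP `ifInOctets`/`ifOutOctets` objects (32-bit Counter32 values in the IF-MIB). The hypothetical sketch below turns successive one-second counter samples into bits per second, tolerates a counter wrap, and flags seconds that exceed a link’s limit, using Link 3’s 9600 bits/s as the example. At the stated volume, four tracks at about 80 points per second each come to roughly 320 points per second.

```python
from typing import List

COUNTER32_MODULUS = 2**32  # 32-bit octet counters such as ifInOctets wrap back to zero here


def rate_bps(prev_octets: int, curr_octets: int, interval_s: float = 1.0,
             modulus: int = COUNTER32_MODULUS) -> float:
    """Bits per second between two samples of a cumulative octet counter.
    The modulo handles a single wrap between samples."""
    delta = (curr_octets - prev_octets) % modulus
    return delta * 8 / interval_s


def seconds_over_limit(samples: List[int], limit_bps: float,
                       interval_s: float = 1.0) -> List[int]:
    """Indices i where the interval ending at samples[i] exceeded limit_bps."""
    return [i for i in range(1, len(samples))
            if rate_bps(samples[i - 1], samples[i], interval_s) > limit_bps]


# Hypothetical Link 3 octet counter sampled once per second.
link3_samples = [0, 1200, 2400, 3700]
print(seconds_over_limit(link3_samples, limit_bps=9600))  # [3]
print(rate_bps(2**32 - 10, 5))                            # 120.0 (counter wrapped)
```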

Radio frequency metrics, including power levels and data rates, were monitored on all the wireless equipment.

SNMP monitoring covered all network devices. This extensive monitoring proved valuable when a power outage took down the network: the NOC team quickly traced the source to a faulty UPS, which was replaced immediately, and the network was back up and running about 20 minutes later.

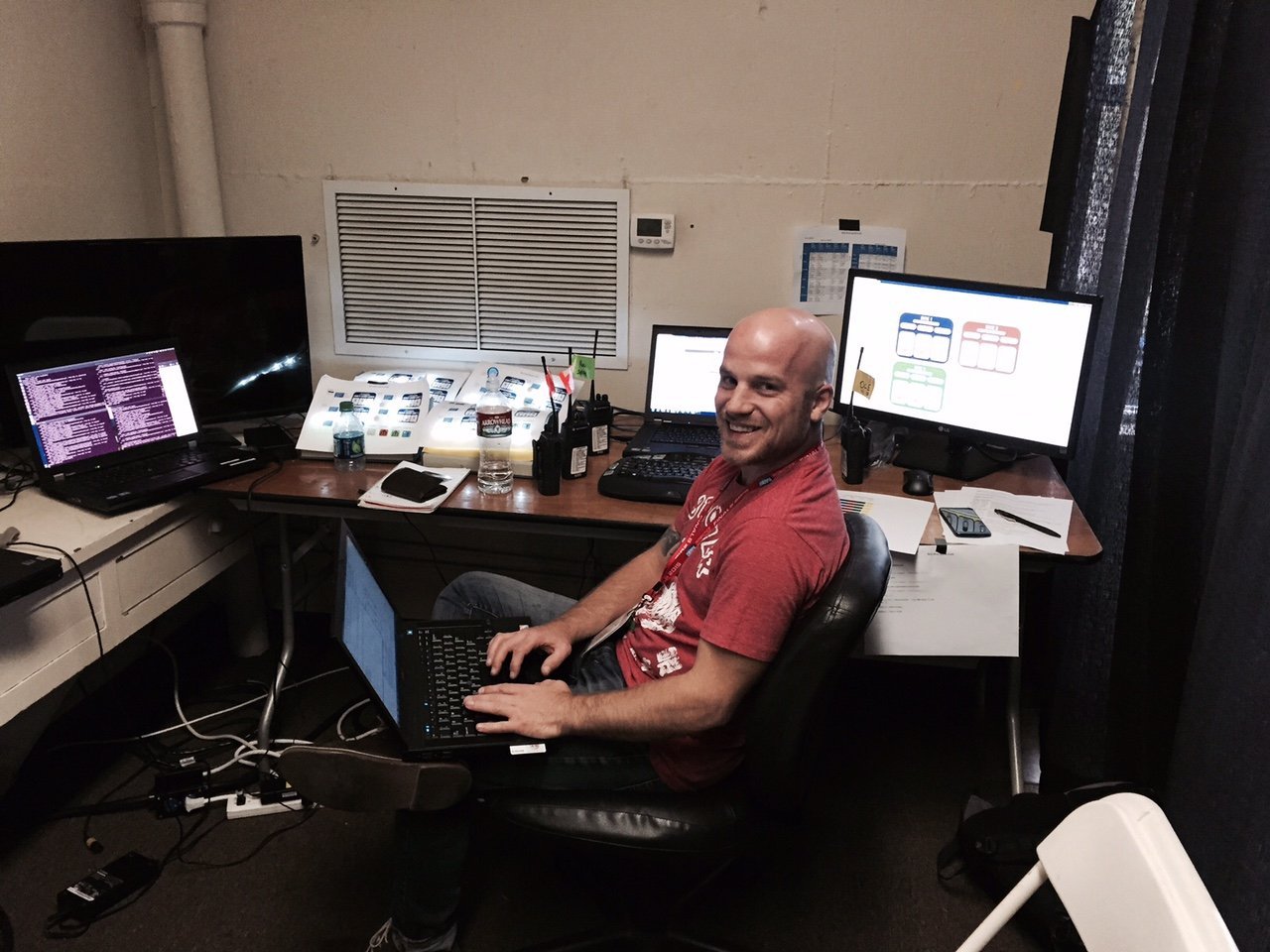

**Richard, monitoring the Degraded Communications inside the NOC**

I am very proud of our work with DARPA for this very important humanitarian goal. This work will ultimately help all of us in disaster response preparation.

– Chris Wellens, President & CEO

List of Tasks:

Drive a car down a simple path

Exit the car

Open a door and walk through it

Turn a valve in one complete circle

Identify a solid, black circle on a wall, select a power drill and use the drill to cut out the circle from the drywall

The surprise task, which was different each day: push down a lever, or unplug a magnetized plug and plug it into another socket

Move through a debris field by moving debris out of the way, or powering through the debris

Climb up a set of stairs, about five feet wide with a handle on only one side